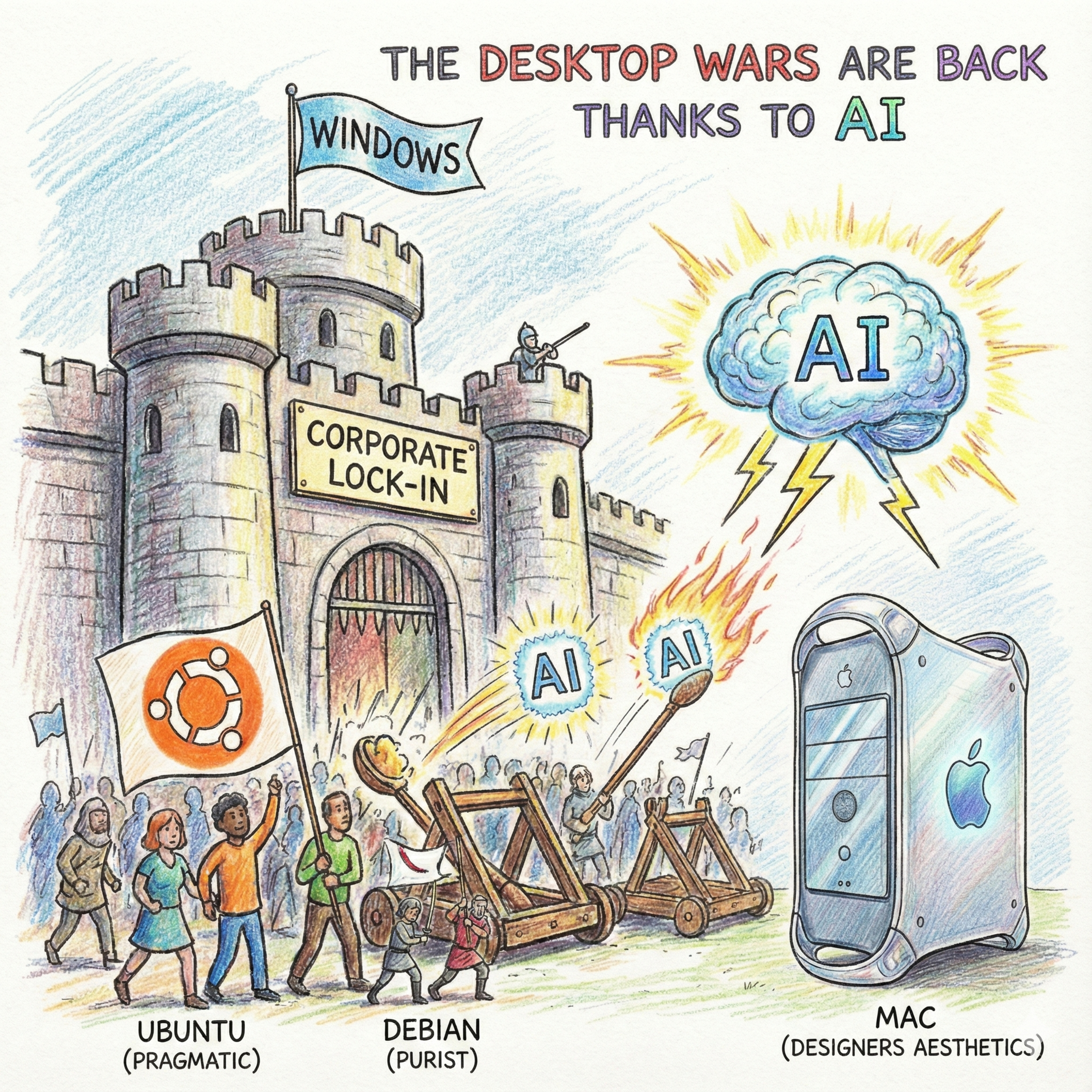

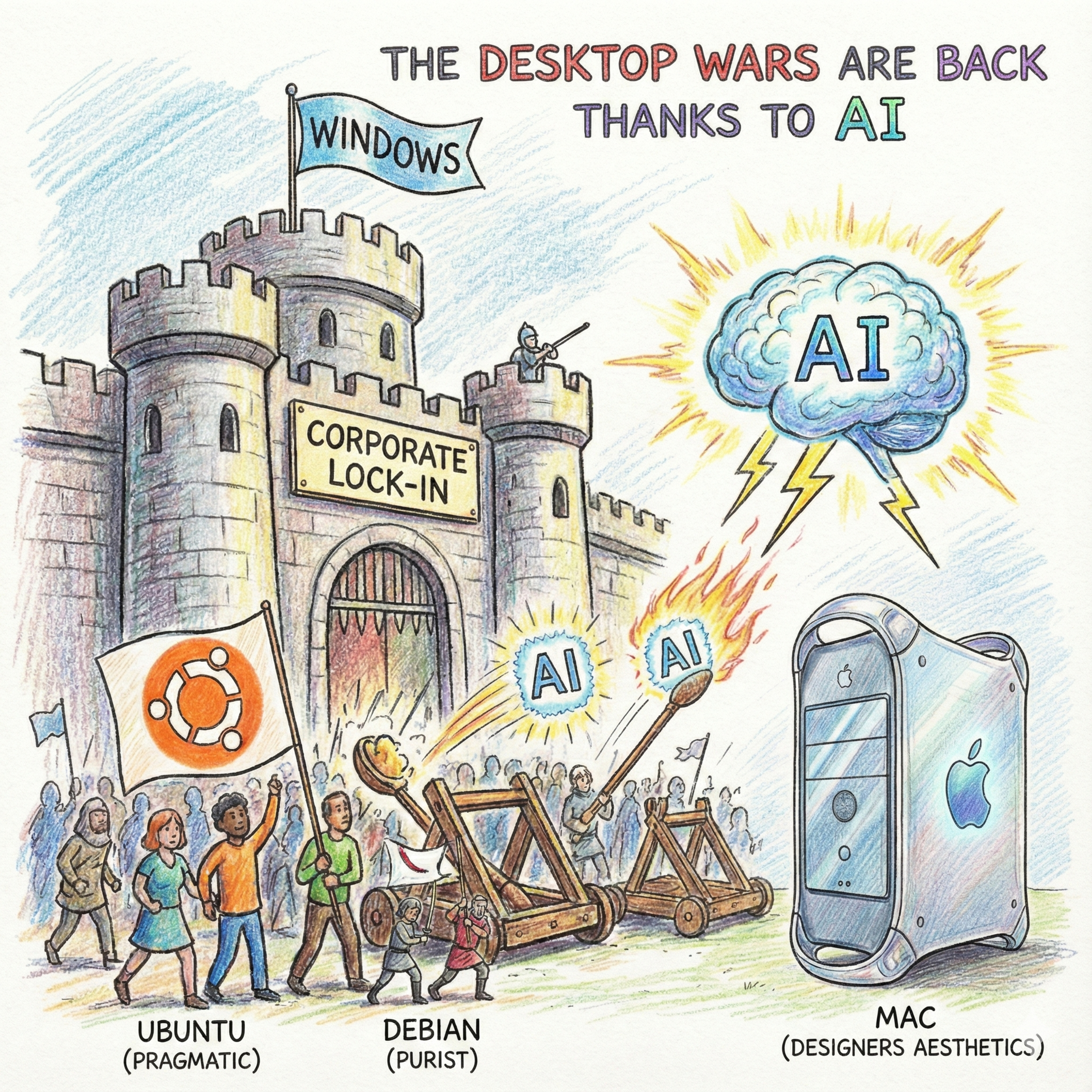

The choice of operating system in 2025 isn't about UI preference; it's a strategic business calculation driven by hardware acceleration, memory capacity, and deployment friction. We conducted an exhaustive analysis of Windows, Ubuntu, Debian, and Apple Silicon to reveal the three specialized domains shaping modern computing: Windows (Client/Stability), Ubuntu (Compute/Throughput), and Apple Silicon (Integrated Efficiency/Capacity).

The Great Architectural Divorce: Windows relies on the strict, highly stable WDDM driver model, which prioritizes crash prevention through kernel abstraction and Timeout Detection and Recovery (TDR). That safety comes at the cost of throughput. Ubuntu, the undisputed foundational standard for AI, benefits from Nvidia's shift to Open Kernel Modules with GSP (GPU System Processor) offloading. The open modules enable Heterogeneous Memory Management (HMM), which lets the GPU work directly on ordinary system-allocated memory without explicit copies, easing the traditional PCIe memory bottleneck. Combined with near-zero-overhead containerization, Ubuntu achieves a measurable 5-8% faster generative AI inference speed than Windows. For high-throughput training, Ubuntu is essential, a fact Microsoft itself recognizes: its Azure AI infrastructure runs entirely on hardened Linux images. WSL2 is a brilliant "Trojan Horse" for keeping developers on Windows, but its virtualization layer adds measurable latency compared with native Ubuntu.

Ubuntu vs. Debian: Agility vs. Purity. We detail why Canonical's Hardware Enablement (HWE) kernels and rapid PPA driver releases (such as the critical 555 driver, which finally brought stable explicit sync to Wayland) make Ubuntu the pragmatic choice for modern workflows. Debian's philosophy of frozen driver purity (locked to older branches like 535) creates crippling feature gaps for cutting-edge Nvidia GPUs and demands expert-level manual maintenance, such as enrolling a Machine Owner Key (MOK) to sign kernel modules for Secure Boot.
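For readers who want to check where their own Ubuntu or Debian machine stands, here is a minimal Python sketch that reads the NVIDIA kernel-module banner and reports the driver branch, whether the open kernel module (the one the HMM discussion above depends on) is loaded, and whether the branch is at least 555, the explicit-sync release mentioned above. Stated assumptions: the banner lives at `/proc/driver/nvidia/version`, its first line starts with `NVRM version:`, and open-module builds include the phrase "Open Kernel Module" in it. The helper names (`parse_nvrm_banner`, `supports_wayland_explicit_sync`, `read_local_banner`) are hypothetical, not part of any NVIDIA tool.

```python
import re
from typing import NamedTuple, Optional

# Assumed location of the NVIDIA kernel-module banner on Linux.
BANNER_PATH = "/proc/driver/nvidia/version"

# Driver branch that, per this analysis, brought stable Wayland explicit sync.
EXPLICIT_SYNC_MIN_BRANCH = 555

# Matches the first "<branch>.<minor>" token, e.g. "555.58" in "555.58.02".
_VERSION_RE = re.compile(r"\b(\d{3,})\.(\d+)")


class NvidiaDriverInfo(NamedTuple):
    branch: int               # e.g. 535 or 555
    minor: int                # e.g. 58 in 555.58.02
    open_kernel_module: bool  # True if the banner names the open module


def parse_nvrm_banner(banner: str) -> Optional[NvidiaDriverInfo]:
    """Parse a banner such as 'NVRM version: NVIDIA UNIX ... 555.58.02 ...'.

    Returns None when the text does not look like an NVRM banner.
    The 'Open Kernel Module' marker is an assumption about the banner format.
    """
    if not banner.startswith("NVRM version:"):
        return None
    match = _VERSION_RE.search(banner)
    if match is None:
        return None
    return NvidiaDriverInfo(
        branch=int(match.group(1)),
        minor=int(match.group(2)),
        open_kernel_module="Open Kernel Module" in banner,
    )


def supports_wayland_explicit_sync(info: NvidiaDriverInfo) -> bool:
    """True if the driver branch is at least the 555 series."""
    return info.branch >= EXPLICIT_SYNC_MIN_BRANCH


def read_local_banner(path: str = BANNER_PATH) -> Optional[str]:
    """Return the first line of the banner file, or None if it is unreadable."""
    try:
        with open(path, encoding="utf-8") as fh:
            return fh.readline().strip()
    except OSError:
        return None


if __name__ == "__main__":
    banner = read_local_banner()
    info = parse_nvrm_banner(banner) if banner else None
    if info is None:
        print("No NVIDIA kernel module banner found")
    else:
        kind = "open" if info.open_kernel_module else "proprietary"
        print(f"Driver {info.branch}.{info.minor} ({kind} kernel module)")
        print("Wayland explicit sync:", supports_wayland_explicit_sync(info))
```

Parsing the banner text rather than shelling out to `nvidia-smi` keeps the sketch dependency-free; on a machine without the NVIDIA module it simply reports that no banner was found.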
For enterprise AI, MLOps (Charmed Kubeflow), and embedded robotics (Jetson), Ubuntu is the mandated, certified infrastructure base.

The Mac's Memory Superpower: Apple Silicon rejected the Nvidia/PC standard entirely, exploiting the crippling VRAM ceiling of consumer Nvidia cards (24GB on the RTX 4090, 32GB on the RTX 5090). With its Unified Memory Architecture (UMA), an Ultra-class chip such as the M2 Ultra offers up to 192GB of memory shared between the CPU and GPU. That capacity lets a Mac load massive, high-fidelity LLMs (such as quantized 70B+ parameter models) locally: models whose weights exceed the VRAM of any single consumer GPU and either fail to load or slow to a crawl when offloaded to system RAM on a high-end PC. This positions the Mac as the premier "Local AI Server," prioritizing capacity for huge models over velocity (raw CUDA speed). We reveal the new hybrid workflow: Mac for local LLM inference (MLX) and Ubuntu Server for scalable training (CUDA).

The Policy Wall Blockade: The single biggest barrier to mass Linux desktop adoption is not technical; it's political. Proprietary vendors enforce structural incompatibilities. Kernel-level anti-cheat systems (Vanguard, Ricochet) lock Linux gamers out of major titles outright. Non-negotiable proprietary applications have no native Linux builds: SolidWorks is Windows-only, and Adobe Creative Cloud runs only on Windows and macOS. Until platform-agnostic standards prevail, your OS choice is dictated by whether your workload requires Capacity, Velocity, or proprietary policy compliance. Don't miss this crucial analysis: the future of your workflow depends on it!

#AIDevelopment #Nvidia #Ubuntu #AppleSilicon #WSL2 #CUDA #MLX #LinuxGaming #AIEcosystem #DesktopWars #TechAnalysis #LLMInference #HardwareAcceleration #GeForce #Wayland #ProprietarySoftware
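The capacity argument in the Apple Silicon section comes down to simple arithmetic: weight memory is roughly parameters times bits per weight divided by eight. A 70B model quantized to 4 bits needs about 35 GB (roughly 32.6 GiB) for its weights alone, before any KV cache. The Python sketch below applies that rule of thumb. The 20% overhead allowance for KV cache and runtime buffers is a hypothetical assumption, and the sketch treats each memory pool as fully usable by the GPU, which real systems do not quite allow (macOS, for instance, reserves part of unified memory for the system). The function names are illustrative only.

```python
GIB = 1024 ** 3


def weights_gib(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / GIB


def fits_in_memory(
    params_billions: float,
    bits_per_weight: float,
    memory_gib: float,
    overhead_fraction: float = 0.2,  # hypothetical allowance for KV cache and buffers
) -> bool:
    """True if the weights plus the assumed overhead fit in the given memory pool."""
    needed = weights_gib(params_billions, bits_per_weight) * (1 + overhead_fraction)
    return needed <= memory_gib


if __name__ == "__main__":
    # Example memory pools in GiB, treated as fully GPU-usable for simplicity.
    pools = {"24 GiB GPU": 24, "32 GiB GPU": 32, "192 GiB unified memory": 192}
    for bits in (4, 8):
        size = weights_gib(70, bits)
        for name, gib in pools.items():
            verdict = "fits" if fits_in_memory(70, bits, gib) else "does not fit"
            print(f"70B @ {bits}-bit ({size:.1f} GiB of weights): {verdict} in {name}")
```

Under these assumptions a 4-bit 70B model needs about 39 GiB including overhead, which is why it overflows a single consumer card yet leaves plenty of headroom in a 192GB unified memory pool.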
